How to Build the Perfect Testing Infrastructure

Learn how a robust testing infrastructure enables development teams to excel and gives engineers the freedom to concentrate on their core competency: creating innovative features and delivering them efficiently and securely to end users.

While once viewed as a tedious chore, testing has evolved over the past decade to become one of the most interesting and enjoyable aspects of the job for engineering teams. Likewise, QA and engineering—once like oil and water—have since joined forces to form a fruitful collaboration, which today encompasses automation engineers, platform teams, and development experience groups in thousands of organizations worldwide.

There have been many factors driving this change, including better testing frameworks, the growing popularity of the test-driven development (TDD) and behavior-driven development (BDD) paradigms, and the growing number and availability of CI platforms.

Engineering projects are often worked on by many diverse teams, and these projects must evolve with the organization over time. This is particularly true when it comes to product quality, so the project should be built in a way that keeps it both easy to use and easy to maintain.

In this article, we walk through the steps to building the ultimate testing infrastructure, using Python and pytest as an example.

Testing Overview

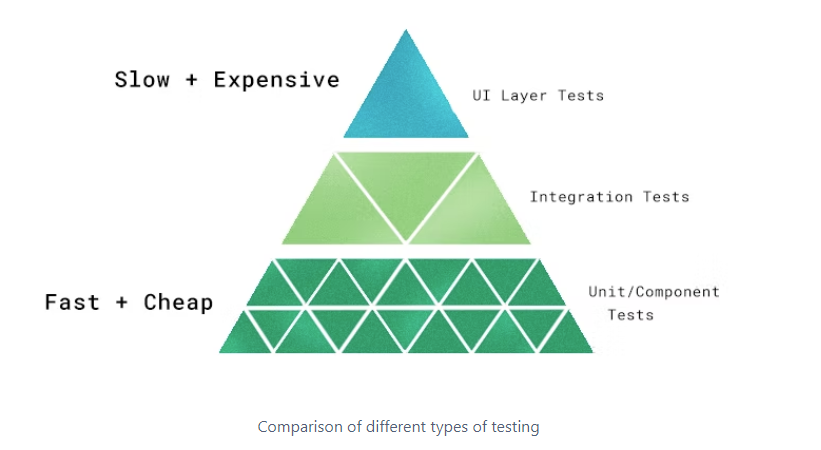

Though every organization and engineer has a preferred flavor of testing, tests generally fall into three kinds, as represented in Figure 1.

- UI (e2e) tests: This type of testing relates to the solution as a whole, as a single black box, interacting with it the same way the user would.

- Integration (component) tests: These tests treat a single component as a black box, interacting with it as would other components.

- Unit tests: These tests are very code-aware and interact with the smallest components of the solution, testing for each individual edge case.
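To make the difference between unit and component tests concrete, here is a minimal sketch. It assumes a hypothetical `greeting` function and uses Python's standard-library `http.server` as a stand-in for a FastAPI service in Docker, so it runs without containers. The unit test calls the function directly; the component test only talks to the service over HTTP, as another component would.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def greeting(name: str) -> dict:
    """Business logic (hypothetical): the natural target of unit tests."""
    if not name:
        raise ValueError("name must not be empty")
    return {"message": f"Hello, {name}!"}


class GreetingHandler(BaseHTTPRequestHandler):
    """Thin HTTP layer standing in for a FastAPI microservice."""

    def do_GET(self):
        try:
            status, body = 200, greeting(self.path.lstrip("/"))
        except ValueError as exc:
            status, body = 400, {"error": str(exc)}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):  # silence per-request logging
        pass


# Unit test: code-aware, calls the function directly.
def test_greeting_unit():
    assert greeting("Labrador") == {"message": "Hello, Labrador!"}


# Component test: treats the service as a black box and talks HTTP to it.
def test_greeting_component():
    server = ThreadingHTTPServer(("127.0.0.1", 0), GreetingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        with urllib.request.urlopen(f"http://127.0.0.1:{port}/Labrador") as resp:
            assert resp.status == 200
            assert json.load(resp) == {"message": "Hello, Labrador!"}
    finally:
        server.shutdown()
        server.server_close()
```

The unit test is where edge cases such as an empty name belong; the component test only checks behavior visible from outside the service.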

Unit tests are usually the fastest. The downside is that they tend to miss big-picture bugs and bugs that propagate across multiple features. This makes component and e2e tests mandatory for every good testing environment, and this is where things often become complicated.

In this article, we will focus on component tests, though all of the steps demonstrated are also applicable to e2e tests.

Setting Up Your Testing Infrastructure

In order to understand how to build the perfect infrastructure, first, we’ll need a project to build the infrastructure for. In our case, we’ll call our project “Labrador.”

Let’s take a look at it:

A few things you’ll notice right away:

- We’re using Poetry to manage our project, which is strongly recommended if you’re using Python. Poetry is an awesome tool, and the 1.2.0 release has taken it to a whole 'nother level.

- We have two basic FastAPI microservices, web_server1 and web_server2, each with a Dockerfile that runs the service listening on port 80.

Testing: What Not to Do

Before we get into the “dry” details of fundamentals and strategies, let’s look at the following tests from <span id="code" class="code" style="color: #6036FB;" fs-richtext-element="code">tests/naive/test_component.py</span>:

This is an example of a simplistic testing approach. A few things worth noting:

- Each test has to set up and tear down its own environment.

- This involves great effort from the developer.

- Many lines are repeated across tests.

- There is significant overprovisioning of resources.

- It is slow and impossible to parallelize.

- An exception (a failed test) means teardown might never happen.

- Difficult analysis: since we don’t want to set up and tear down multiple times, we end up with convoluted tests that fail frequently.
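For illustration only (this is not the contents of tests/naive/test_component.py), here is a sketch of the naive pattern. It assumes a hypothetical `OkHandler` standard-library server in place of a Docker container, and a hard-coded port playing the role of port 80. Each test repeats its own setup and teardown, and if an assertion fails, `stop_server` is never reached, so the port can stay occupied and later tests may fail to bind it.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

FIXED_PORT = 8765  # hypothetical; plays the role of the hard-coded port 80


class OkHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a containerized service."""

    def do_GET(self):
        self.send_response(200)
        self.end_headers()
        self.wfile.write(b"ok")

    def log_message(self, *args):  # silence per-request logging
        pass


def start_server(port):
    server = HTTPServer(("127.0.0.1", port), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def stop_server(server):
    server.shutdown()
    server.server_close()


# Anti-pattern: every test repeats the same setup and teardown by hand.
def test_root_body():
    server = start_server(FIXED_PORT)
    with urllib.request.urlopen(f"http://127.0.0.1:{FIXED_PORT}/") as resp:
        assert resp.read() == b"ok"  # if this fails...
    stop_server(server)  # ...this line never runs and the port stays bound


def test_root_status():
    server = start_server(FIXED_PORT)  # same boilerplate, same port
    with urllib.request.urlopen(f"http://127.0.0.1:{FIXED_PORT}/") as resp:
        assert resp.status == 200
    stop_server(server)
```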

Now that we’ve seen what can go wrong, let’s look at how to do things right.

Testing Done Right

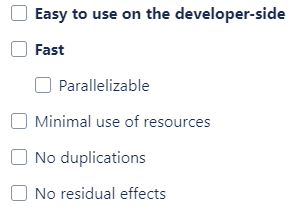

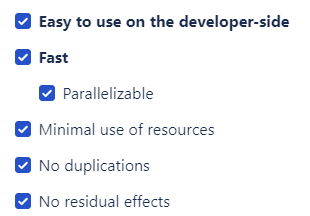

An effective testing infrastructure needs to check the following boxes:

A testing infrastructure that’s difficult to use causes engineers to cut corners and test only the most straightforward cases.

A slow testing infrastructure slows down the entire engineering team, since every change has to get through it in CI.

Let’s attempt to tackle each of these issues until we reach true velocity.

Fixtures

“A test fixture is an environment used to consistently test some item, device, or piece of software.” Thanks, Wikipedia.😉

pytest introduces fixtures to help set up and tear down environments for tests without having to manage them ourselves in each test. An example can be seen in tests/fixture/test_component.py:

The code is similar to what we’ve seen previously. Here, however, we define the setup and teardown just once, in the fixture, and yield to the test in line 14. This lets us write much simpler tests, with the following benefits:

- Each test is much more self-contained, only dealing with execution and assertion.

- Different cases are now checked using different tests, allowing for greater debuggability.

- Even if a test fails, teardown still happens, guaranteeing no leftover containers.

- The “web” fixture yields the server port, allowing for greater flexibility if we require more port options (due to parallelization/multiple services, for example).
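Here is a minimal sketch of the fixture pattern, again assuming a standard-library `http.server` stand-in instead of a Docker container (`OkHandler` and `running_server` are hypothetical names). The fixture does its setup before `yield`, hands the port to the test, and tears down after `yield`; pytest runs that teardown even when the test fails. Binding to port 0 lets the OS choose a free port, which is one reason to yield the port rather than hard-code it.

```python
import threading
import urllib.request
from contextlib import contextmanager
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

import pytest


class OkHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for one of the containerized services."""

    def do_GET(self):
        self.send_response(200)
        self.end_headers()
        self.wfile.write(b"ok")

    def log_message(self, *args):  # silence per-request logging
        pass


@contextmanager
def running_server():
    """Start the service, yield its port, and always shut it down."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        yield server.server_address[1]
    finally:
        server.shutdown()
        server.server_close()


@pytest.fixture
def web():
    # Setup happens before the yield, teardown after it; pytest resumes
    # the fixture for teardown even if the test using it fails.
    with running_server() as port:
        yield port


def test_root_returns_ok(web):
    with urllib.request.urlopen(f"http://127.0.0.1:{web}/") as resp:
        assert resp.read() == b"ok"


def test_root_status_code(web):
    with urllib.request.urlopen(f"http://127.0.0.1:{web}/") as resp:
        assert resp.status == 200
```

Keeping the lifecycle in a plain context manager is a design choice: other fixtures, or scripts outside pytest, can reuse it.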

Another neat trick is scoping, which lets a fixture run just once for multiple tests, depending on its scope. An example can be found in <span id="code" class="code" style="color: #6036FB;" fs-richtext-element="code">tests/fixture/test_component_with_scope.py</span>.

Try running both of these files to see which is faster! ⏩

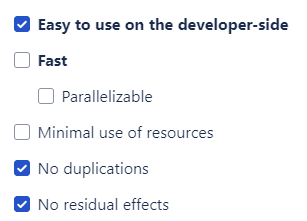

Status

Resource Separation

What happens if we want to run both servers at the same time?

Both are using port 80; even if we assign a different port for one of them, what will happen when we add another server? What happens if we want to spin up multiple instances?

Now, using the unused_tcp_port_factory fixture (originally implemented in pytest-asyncio, the pytest-dev plugin that adds asyncio support to pytest), we can see in tests/resources/test_component.py how both servers run side by side without issue.

This offers the following advantages:

- Use of multiple resources without altering these resources during testing

- Baseline for parallelization
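The idea behind a port factory can be sketched with the standard library alone: bind a socket to port 0, let the OS choose a free port, and remember which ports were already handed out. This is a sketch of the same idea, not pytest-asyncio's code; `free_port`, `make_port_factory`, `docker_run_cmd`, and the `labrador/...` image names are hypothetical. Each container keeps listening on port 80 internally, and only the host side of the `-p` mapping changes.

```python
import socket


def free_port() -> int:
    """Ask the OS for a currently unused TCP port by binding to port 0."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("127.0.0.1", 0))
        return sock.getsockname()[1]


def make_port_factory():
    """Return a callable that never hands out the same port twice."""
    handed_out = set()

    def next_port() -> int:
        port = free_port()
        while port in handed_out:
            port = free_port()
        handed_out.add(port)
        return port

    return next_port


def docker_run_cmd(image: str, name: str, host_port: int) -> list[str]:
    """Build a `docker run` command mapping a host port to the container's port 80."""
    return ["docker", "run", "-d", "--rm", "--name", name,
            "-p", f"{host_port}:80", image]


if __name__ == "__main__":
    next_port = make_port_factory()
    for service in ("web_server1", "web_server2"):
        cmd = docker_run_cmd(f"labrador/{service}", f"labrador-{service}", next_port())
        print(" ".join(cmd))  # e.g. pass `cmd` to subprocess.run in a fixture
```

There is a small race: the port is released before Docker binds it, so another process could take it in between. For test suites this is usually acceptable, and a retry on bind failure covers it.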

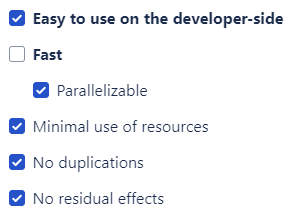

Status

Parallelization

Parallelization (in testing) is the concept of running multiple tests at once. This may sound trivial, but it is an ongoing and complicated process.

It’s very easy for a single developer to leave residual effects that affect other developers’ tests (e.g., file system artifacts, open ports, or state-machine changes). So if you allow tests to run in parallel, you need two things: a reliable architecture, which is less likely to break and easier to maintain, and, from the R&D perspective, a good engineering team that makes sure no parallelization-breaking tests are introduced, which is achieved through rigorous code reviews (CRs) and education.

Additionally, some fixtures may be inherently difficult to parallelize, such as those that provide a database or Redis container.

It’s highly recommended to start parallelizing as soon as possible in order to avoid blockers and to address issues as they occur.

In pytest, parallelization is most commonly done using pytest-xdist.

Try running the same suite using pytest -n auto tests/, and see the results.
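Once tests run in parallel, anything shared has to become per-worker. A minimal sketch, assuming pytest-xdist's `worker_id` fixture (which is "gw0", "gw1", and so on under `-n`, and "master" when xdist is not distributing tests) and pytest's built-in `tmp_path` fixture. The per-worker Redis database scheme and the `labrador-web-...` container name are hypothetical; they only show how a shared resource such as a Redis container can be partitioned.

```python
import pytest

REDIS_DATABASES = 16  # Redis's default number of logical databases


def redis_db_index(worker_id: str) -> int:
    """Map an xdist worker to its own Redis logical database (hypothetical scheme)."""
    if worker_id == "master":  # not running under xdist: single process
        return 0
    index = int(worker_id.removeprefix("gw"))
    if index >= REDIS_DATABASES:
        raise ValueError(f"{worker_id} has no free Redis database; raise `databases`")
    return index


@pytest.fixture(scope="session")
def container_name(worker_id):
    # One container per worker, so parallel workers never clash on names.
    return f"labrador-web-{worker_id}"


@pytest.fixture
def redis_db(worker_id):
    return redis_db_index(worker_id)


def test_report_is_isolated(tmp_path):
    # tmp_path is a fresh directory per test, so parallel tests never share files.
    report = tmp_path / "report.txt"
    report.write_text("ok")
    assert report.read_text() == "ok"
```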

Status

Summary

For engineers, it’s about much more than the technical level and achievements of the organizations they work for; it’s also about how rewarding the job is, because they are passionate about what they do.

Having a sound testing infrastructure empowers development organizations, allowing engineers to focus on what they do best: developing amazing features and getting them to production quickly and safely.

Go ahead, dazzle us—just make sure it works! 🚀